Automatically Exporting CloudWatch Logs to S3 With Kinesis and Lambda

Amazon CloudWatch is a great service for collecting logs and metrics from your AWS resources. Until recently, exporting and analyzing these logs was challenging. The announcement of Kinesis subscriptions for CloudWatch enables a whole new way to work with this service.

This blog post will explain how you can leverage Lambda to create automated data pipelines for CloudWatch, with no dedicated compute resources. The focus will be on exporting logs to objects in S3, but the same concept can be used to enable any sort of custom processing within the constraints of Lambda.

Exporting to S3 is a good place to start, as it allows you to run Hive or other log processing software on the exported logs. The source we will use as an example is CloudTrail, but the same method applies to any CloudWatch log group. It’s worth noting that CloudTrail logs can already be exported to S3 automatically from the AWS console; CloudTrail is used here simply as a convenient source for the log group if you don’t have any existing sources.

Before Starting

You will first need to set up a CloudWatch log group. The AWS guide Sending CloudTrail Events to CloudWatch Logs explains the steps required if you want to use CloudTrail as the source.

Once you have a log group set up, follow the steps in Real-time Processing of Log Data with Subscriptions. This documentation is quite new, so I did come across a few gotchas:

- Make sure your aws-cli is completely up to date. You will know it’s not if aws logs put-subscription-filter is not a valid command.

- Configure your Kinesis stream within us-east-1, eu-west-1 or us-west-2, as your stream needs to be in the same region as your Lambda functions for the next steps.

- If you are following all the steps in the guide, it’s actually base64 -d, not base64 -D.

When you have finished setting up your stream, it will be useful to copy the output of aws kinesis get-records for testing your function later.

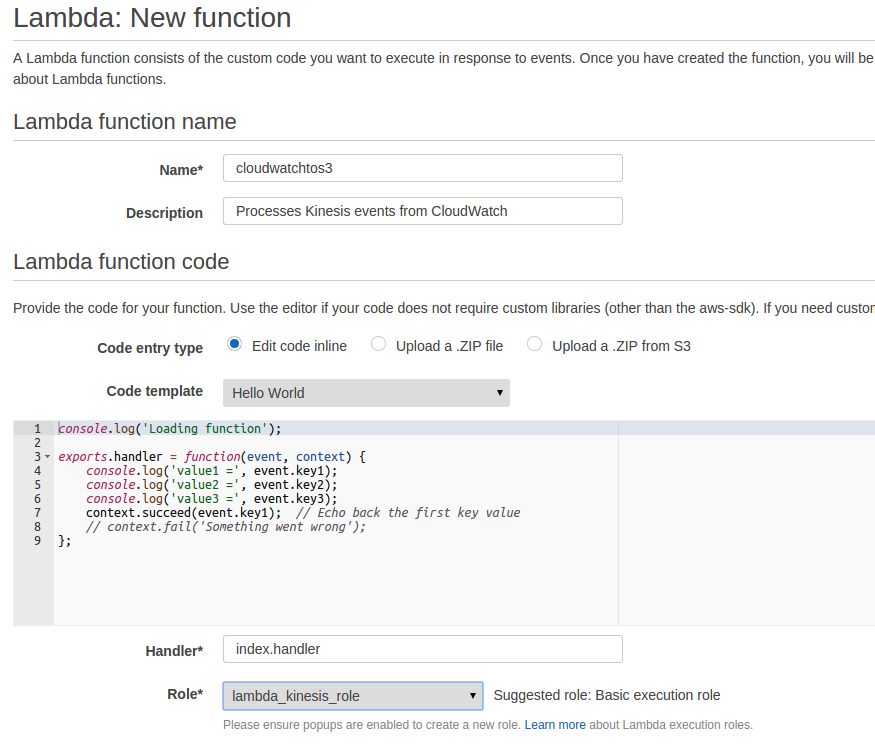

Create a Lambda Function

Ok, so now you have a Kinesis stream subscribed to your log group. The next step is to build a Lambda function that will process the events fired by this stream. A great tool for creating Lambda functions is grunt-aws-lambda.

Start by creating a function with the hello world template.

For the role, it is recommended to start with a new Kinesis Execution role and then edit it to add S3FullAccess.

Once this is created, follow the instructions in grunt-aws-lambda to set up a new project.

The key files you will need to configure are:


Remember to change your_bucket_name to your own S3 bucket.


Remember to change the function name if you have picked a different name, likewise with the region.

To test your function, use the following, replacing base64_gzipped_content. A good source for the Kinesis data is the output of aws kinesis get-records from when you set up the Kinesis stream.
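If you need to rebuild the test event yourself, the sketch below wraps one Data value in the general shape Lambda uses for a Kinesis batch and prints it as JSON. The function name buildTestEvent, the stream ARN, the partition key and the sequence number are all placeholders for illustration; only the data field matters to a handler that decodes the record payload.

```javascript
'use strict';

// Wrap one base64-encoded, gzipped Data value (as printed by
// "aws kinesis get-records") in a Kinesis-style Lambda event.
function buildTestEvent(base64GzippedContent) {
  return {
    Records: [
      {
        eventSource: 'aws:kinesis',
        eventName: 'aws:kinesis:record',
        awsRegion: 'us-east-1',
        // Placeholder ARN; not used by a handler that only reads the data.
        eventSourceARN: 'arn:aws:kinesis:us-east-1:123456789012:stream/your_stream_name',
        kinesis: {
          kinesisSchemaVersion: '1.0',
          partitionKey: 'placeholder-partition-key',
          sequenceNumber: '0',
          data: base64GzippedContent
        }
      }
    ]
  };
}

// Print the event so the output can be saved as event.json.
console.log(JSON.stringify(buildTestEvent('base64_gzipped_content'), null, 2));
```

Replace base64_gzipped_content with a real Data value before saving the output, otherwise the handler will fail when it tries to decompress it.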


You can then test your function with grunt lambda_invoke.

If all went well, there should now be a log file named cloudtrail_timestamp in your S3 bucket.

Once you are happy with the function, you can run grunt deploy.

Your function is now deployed to Lambda and ready to be used.

Add Kinesis Event Source

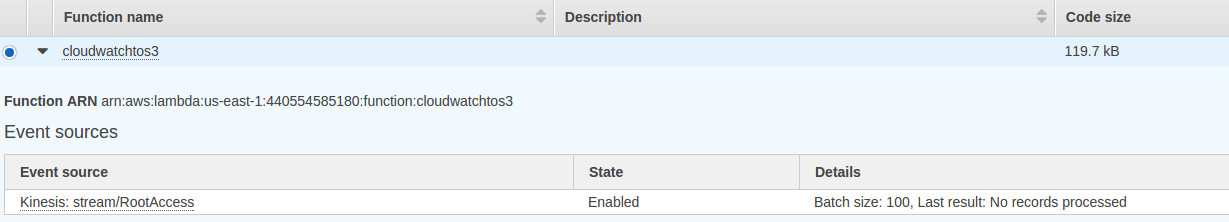

The final step is to add your Kinesis stream as an event source for your Lambda function.

    aws lambda create-event-source-mapping \
        --region us-east-1 \
        --function-name cloudwatchtos3 \
        --event-source-arn "the_arn_of_your_kinesis_stream" \
        --batch-size 100 \
        --starting-position TRIM_HORIZON

When you go to your Lambda function list you should see the following.

Once the batch is ready, the Details will change to Batch Size: 100, Last result: OK. You should also see the log files appearing in your S3 bucket. If there is an error, you can check the function’s logs; wrong permissions are the most likely cause. If you are getting timeouts, you can raise the timeout limit. I raised it from 3 seconds to 10.

Finishing Up and Next Steps

If you are just testing this process out, don’t forget to delete your Kinesis stream, log group and S3 bucket afterwards.

Now that your log data is in S3, analysis options become extremely flexible. You could import it into EMR or download the data for your own analysis.

Of course with this concept you aren’t limited to just exporting to S3. You could use a restrictive subscription for critical errors and trigger alerts within Lambda. Or you could upload logs as documents to CloudSearch. Logs are a powerful construct for data infrastructure and this concept enables you to build this infrastructure without managing your own computing resources.
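As a rough sketch of the alerting idea, the function below picks out log events whose message matches a pattern from one decoded subscription payload. The name findCriticalEvents, the sample payload and the pattern are hypothetical, and how the alert is actually delivered (for example a notification service) is left out.

```javascript
'use strict';

// Return the log events in a decoded subscription payload whose message
// matches the pattern. Use a pattern without the "g" flag, since global
// regular expressions keep state between test() calls.
function findCriticalEvents(payload, pattern) {
  if (payload.messageType !== 'DATA_MESSAGE') return [];
  return payload.logEvents.filter((logEvent) => pattern.test(logEvent.message));
}

// Hypothetical payload, shaped like a decoded CloudWatch Logs record.
const samplePayload = {
  messageType: 'DATA_MESSAGE',
  logEvents: [
    { id: '1', timestamp: 1, message: 'INFO request served' },
    { id: '2', timestamp: 2, message: 'CRITICAL database unreachable' }
  ]
};

for (const logEvent of findCriticalEvents(samplePayload, /CRITICAL/)) {
  // In a real function this is where the alert would be sent.
  console.log('alert:', logEvent.message);
}
```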

Pricing

Pricing is hard to predict, as it depends on the volume and timing of log generation. Kinesis will be the biggest driver of costs, with the cost of a single shard and PUT requests coming to around $15 a month. The best way to test pricing is to run the resources for a couple of days with the log that you want to export. Once you are done, you can simply delete the Kinesis stream to avoid any further costs. The cost of exporting CloudTrail for 2 days came to under $1; however, CloudTrail has a fairly low volume.
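To make a rough estimate for your own volume, the arithmetic is simply shard-hours plus PUT payload units. The sketch below assumes that pricing model; the function name is hypothetical and the rates in the usage example are illustrative placeholders, not current prices, so take the real figures from the Kinesis pricing page for your region.

```javascript
'use strict';

// Estimate the monthly Kinesis cost in USD from shard-hours and PUT units.
function estimateMonthlyKinesisCost({
  shards,
  putUnitsPerMonth,
  shardHourUsd,
  perMillionPutUnitsUsd,
  hoursPerMonth = 730 // average number of hours in a month
}) {
  const shardCost = shards * hoursPerMonth * shardHourUsd;
  const putCost = (putUnitsPerMonth / 1e6) * perMillionPutUnitsUsd;
  return shardCost + putCost;
}

// Illustrative rates only (assumptions, not quoted prices).
const estimate = estimateMonthlyKinesisCost({
  shards: 1,
  putUnitsPerMonth: 100e6,
  shardHourUsd: 0.015,
  perMillionPutUnitsUsd: 0.014
});
console.log('estimated monthly cost (USD):', estimate.toFixed(2));
```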